Storage Evolution / ua-hosting.company's blog / Sudo Null IT News

"May you live in an era of change" is a very concise and understandable curse for anyone over, say, 30 years old. The current stage of human development has made us unwitting witnesses to a unique "era of change". And here it is not even the scale of modern technological progress that matters: for civilization, the transition from stone tools to copper was obviously far more significant than doubling a processor's computing power, however much more technical the latter may be. What is simply discouraging is the enormous, ever-increasing pace of change in the world's technical development. A hundred years ago, every self-respecting gentleman simply had to be aware of all the "new products" of science and engineering so as not to look like a jester and a country bumpkin in the eyes of his circle; now, given the volume and speed at which these "new products" appear, keeping track of them all is simply hopeless, and the question is no longer even posed that way. The inflation of technologies that until recently were inconceivable, and of the human capabilities tied to them, has effectively killed an excellent literary genre: science fiction. There is no longer any need for it. The future has come closer than ever before, and a planned story about "wonderful technology" risks reaching the reader later than something similar rolls off the assembly line of a research institute.

The progress of human technical thought has always been reflected most quickly in the field of information technology. The methods of collecting, storing, systematizing, and disseminating information run like a red thread through the entire history of mankind. Breakthroughs, whether in the technical fields or the humanities, have one way or another affected IT. The path traveled by human civilization is a series of successive steps toward better methods of storing and transmitting data. In this article, we will try to understand and analyze in more detail the main stages in the development of information carriers and compare them, starting from the most primitive, clay tablets, up to the latest successes in creating a brain-machine interface.

The task you have set is no joke, look what you have taken on, the intrigued reader will say. How, it would seem, is it possible, while observing at least elementary correctness, to compare the fundamentally different technologies of the past and today? The answer is that the ways in which a person perceives information have in fact changed little. The forms of recording and reading information by means of sounds, images, and coded symbols (letters) have remained the same. In many ways this reality has become, so to speak, a common denominator that makes qualitative comparisons possible.

Methodology

To begin with, it is worth recalling the basic facts we will rely on. The elementary unit of information in the binary number system is the "bit", while the smallest unit of storage and processing in a computer is the "byte", which in its classic form consists of 8 bits. The megabyte, more familiar to our readers, corresponds to: 1 MB = 1024 KB = 1,048,576 bytes.
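Every calculation below follows the same chain: divide a bit count by 8 to get bytes, then by 1024 for each step up. A minimal Python sketch of that chain; the helper name `bits_to_units` is our own, hypothetical, and it assumes the article's binary convention of 1 KB = 1024 bytes:

```python
BITS_PER_BYTE = 8
BINARY_STEP = 1024  # the article's convention: 1 KB = 1024 bytes, 1 MB = 1024 KB


def bits_to_units(bits: float) -> dict[str, float]:
    """Convert a bit count to bytes, KB and MB using binary (1024-based) steps."""
    n_bytes = bits / BITS_PER_BYTE
    kb = n_bytes / BINARY_STEP
    mb = kb / BINARY_STEP
    return {"bytes": n_bytes, "KB": kb, "MB": mb}


# 1 MB expressed in bits converts back to 1,048,576 bytes
print(bits_to_units(1_048_576 * 8))
```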

These units are today the universal measures of the amount of digital data stored on a particular medium, so they will be very convenient for the rest of our work. Their universality lies in the fact that a group of bits, in effect a set of numbers, a set of 1/0 values, can describe and thereby digitize any material phenomenon. It does not matter whether it is the most sophisticated font, a picture, or a melody: all of these consist of discrete components, each of which is assigned its own unique digital code. Understanding this basic principle is what makes our further progress possible.

The hard, analog childhood of civilization

The very evolution of our species placed people in the embrace of an analog perception of the space around them, which in many respects predetermined the course of our technological development.

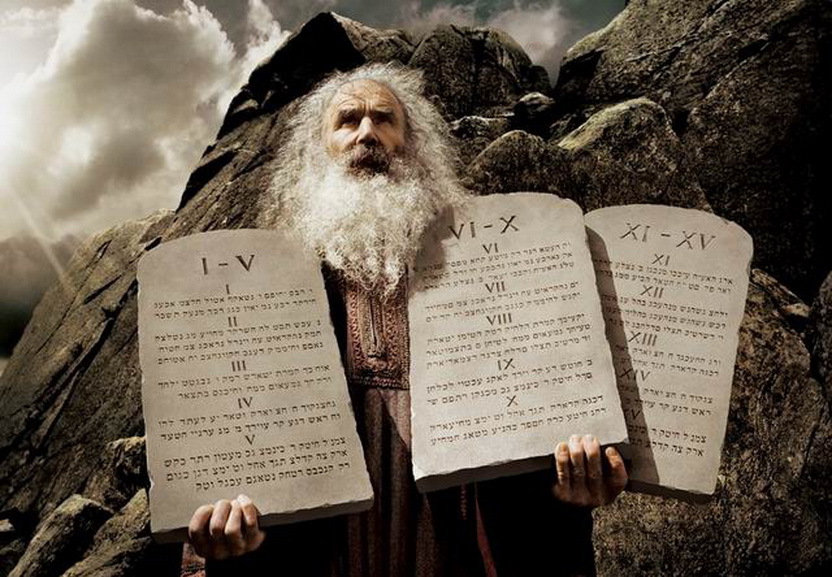

At first glance, to today's Homo sapiens sapiens, the technologies that originated at the very dawn of humanity look very primitive, and the existence of mankind before the transition to the era of "numbers" may seem unsophisticated in its details. But is that really so, was it such a "childhood"? Prompted by this question, we can consider the rather unpretentious methods of storing and processing information at the stage of their appearance. The first information carrier of its kind created by humans was a portable flat object with images applied to it. Tablets and parchments made it possible not only to store this data but also to process it more efficiently than ever before. At this stage, the opportunity appeared to concentrate a huge amount of information in specially designated places: repositories.

The first known data centers, as we would call them now (until recently they were called libraries), arose in the Middle East, between the Nile and the Euphrates, in the second millennium BC. Throughout all this time, the format of the information carrier itself has largely determined the ways of interacting with it. And here it no longer matters much whether it is a clay tablet, a papyrus scroll, or a standard A4 sheet of pulp-and-paper: for all these thousands of years they have been closely united by the analog way of entering and reading data from the medium.

The period during which the analog way of interaction between a person and their informational possessions successfully dominated lasted right up to our days; only very recently, already in the 21st century, has it finally given way to the digital format.

Having outlined the approximate temporal and semantic framework of the analog stage of our civilization, we can now return to the question posed at the beginning of this section: were the methods of storing information we had until very recently, before we knew about the iPad, flash drives, and optical discs, really so inefficient?

Let's do the math

If we set aside the final stage of decline of analog storage technology, which lasted for the last 30 years, we can regretfully note that, for the most part, these technologies underwent no revolutionary changes for thousands of years. Indeed, a breakthrough in this area began relatively recently, at the end of the nineteenth century, but more on that below. Until the middle of that century, two main methods of recording data can be distinguished: writing and drawing. A significant difference between these methods of recording information, completely independent of the medium that carries it, lies in the logic of how the information is registered.

Painting

Painting seems to be the simplest way of transmitting information: it requires no additional knowledge either when the information is created or when it is used, and it was thus in fact the original format perceived by a person. The more accurately the light reflected from the surfaces of surrounding objects onto the retina of the artist's eye is transferred to the surface of the canvas, the more informative the image will be. Imperfections in the transfer technique and in the materials used by the image's creator are the noise that will later interfere with the accurate reading of information recorded in this way.

How informative is an image, and what is the quantitative value of the information it carries? At this stage of understanding how information is transmitted graphically, we can finally dive into the first calculations. A basic computer science course will come to our aid here.

Any raster image is discrete: it is simply a set of points. Knowing this property, we can translate the information it displays into units we understand. Since the presence or absence of a contrasting point is in fact the simplest binary code, 1/0, each point carries 1 bit of information. In turn, an image made up of a group of points, say 100x100, will contain:

V = K * I = 100 x 100 x 1 bit = 10,000 bits / 8 bits = 1250 bytes / 1024 = 1.22 kbytes

But let's not forget that the above calculation is correct only for a monochrome image. For the much more commonly used color images, the amount of transmitted information will of course increase significantly. If we take 24-bit (photographic quality) encoding as a sufficient color depth, which, as I recall, supports 16,777,216 colors, we get a much larger amount of data for the same number of points:

V = K * I = 100 x 100 x 24 bits = 240,000 bits / 8 bits = 30,000 bytes / 1024 = 29.30 kbytes
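The formula V = K * I translates directly into a short Python function. This is an illustrative sketch (the name `raster_size_kb` is hypothetical) that reproduces both 100x100 results for uncompressed images:

```python
def raster_size_kb(width: int, height: int, bits_per_pixel: int) -> float:
    """Uncompressed size in KB (1 KB = 1024 bytes) of a width x height raster."""
    total_bits = width * height * bits_per_pixel  # V = K * I
    return total_bits / 8 / 1024


mono = raster_size_kb(100, 100, 1)    # 1 bit per point: contrasting point present or absent
color = raster_size_kb(100, 100, 24)  # 24-bit "photographic" color depth
print(f"{mono:.2f} KB, {color:.2f} KB")  # 1.22 KB, 29.30 KB

# 24 bits per point give 2**24 distinct colors
print(2 ** 24)  # 16777216
```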

As you know, a point has no size, and in theory any area allotted for an image can carry an infinitely large amount of information. In practice, however, sizes are quite definite, and accordingly the amount of data can be determined.

On the basis of many studies, it has been found that a person with average visual acuity, at a distance convenient for reading (30 cm), can distinguish about 188 lines per centimeter, which in modern technology roughly corresponds to the standard scanning resolution of household scanners, 600 dpi. Accordingly, from one square centimeter of a flat surface, without additional devices, an average person can read 188 x 188 points, which is equivalent to:

For a monochrome image:

Vm = K * I = 188 x 188 x 1 bit = 35,344 bits / 8 bits = 4418 bytes / 1024 = 4.31 kbytes

For photo-quality images:

Vc = K * I = 188 x 188 x 24 bits = 848 256 bits / 8 bits = 106,032 bytes / 1024 = 103.55 kbytes

For greater clarity, on the basis of these calculations, we can easily see how much information a familiar A4 sheet measuring 29.7 x 21 cm carries:

VA4 = L1 x L2 x Vm = 29.7 cm x 21 cm x 4.31 kbytes = 2688.15 kbytes / 1024 = 2.63 MB - black-and-white image

VA4 = L1 x L2 x Vc = 29.7 cm x 21 cm x 103.55 kbytes = 64584.14 kbytes / 1024 = 63.07 MB - color image
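The per-square-centimeter and A4 figures can be recomputed the same way. This sketch assumes the text's 188 lines/cm resolving power and a 21 x 29.7 cm sheet; the helper names are hypothetical. Because it does not round intermediate values, its last digit may differ slightly from hand-rounded figures (the monochrome sheet comes out at about 2.63 MB):

```python
LINES_PER_CM = 188            # resolving power assumed in the text (~30 cm viewing distance)
A4_W_CM, A4_H_CM = 21.0, 29.7


def kb_per_cm2(bits_per_point: int, lines_per_cm: int = LINES_PER_CM) -> float:
    """KB that one square centimeter can carry at the given resolution."""
    return lines_per_cm ** 2 * bits_per_point / 8 / 1024


def a4_capacity_mb(bits_per_point: int) -> float:
    """Capacity of a full A4 sheet in MB, without rounding intermediate values."""
    return A4_W_CM * A4_H_CM * kb_per_cm2(bits_per_point) / 1024


for label, bpp in [("monochrome", 1), ("24-bit color", 24)]:
    print(f"{label}: {kb_per_cm2(bpp):.2f} KB/cm2, A4 = {a4_capacity_mb(bpp):.2f} MB")
```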

Writing

If the "picture" is more or less straightforward with painting, writing is not so simple. The obvious differences in how information is conveyed by text and by images call for a different approach to determining the information content of these forms. Unlike an image, writing is a standardized, encoded form of data transmission. Without knowing the code of the words embedded in the letters, and of the letters that form them, the informational load of, say, Sumerian cuneiform is zero for most of us, while ancient images on the ruins of the same Babylon will be correctly perceived even by a person completely ignorant of the intricacies of the ancient world. It becomes quite obvious that the information content of a text depends heavily on whose hands it falls into and on how a particular person interprets it.

Nevertheless, even under such circumstances, which somewhat erode the fairness of our approach, we can quite unambiguously calculate the amount of information placed in texts on various kinds of flat surfaces.

Using the binary coding system already familiar to us and the standard byte, writing, which can be thought of as a set of letters forming words and sentences, is very easy to digitize into 1/0. The usual 8-bit byte can take up to 256 different digital combinations, which should be enough to describe digitally any existing alphabet, as well as numbers and punctuation marks. From this follows the conclusion that any typical alphabetic character written on a surface takes up 1 byte in digital form.

The situation is a little different with hieroglyphs, which have also been widely used for several thousand years. By replacing a whole word with a single character, this encoding obviously uses the allotted surface far more efficiently, in terms of data load, than alphabet-based languages do. At the same time, the number of unique characters, each of which must be assigned its own combination of 1s and 0s, is many times greater. In the most widespread hieroglyphic languages, Chinese and Japanese, according to statistics no more than 50,000 unique characters are actually used; in Japanese even fewer: the country's Ministry of Education has identified a total of 1,850 characters for everyday use. In any case, the 256 combinations of a single byte cannot cope here. One byte is good, but two are needed: 16 bits give 65,536 combinations.
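A quick way to see the one-byte versus two-byte distinction is to encode sample text with real Python codecs. The choice of `latin-1`, `cp1251` and `utf-16-be` is ours, purely for illustration (the article does not name an encoding), and the 50,000 figure is the text's estimate:

```python
# One byte = 8 bits = 256 codes; two bytes = 16 bits = 65,536 codes
ONE_BYTE_CODES = 2 ** 8
TWO_BYTE_CODES = 2 ** 16

# Single-byte code pages store one alphabetic character per byte
latin = "Storage".encode("latin-1")
cyrillic = "Память".encode("cp1251")  # Cyrillic also fits in one byte per letter

# ~50,000 characters in use exceed 256 codes but fit within 65,536
HIEROGLYPHS_IN_USE = 50_000
needs_two_bytes = ONE_BYTE_CODES < HIEROGLYPHS_IN_USE <= TWO_BYTE_CODES

# UTF-16 stores these common CJK characters in 2 bytes each
cjk = "漢字".encode("utf-16-be")

print(len(latin), len(cyrillic), needs_two_bytes, len(cjk))  # 7 6 True 4
```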

Current practice tells us that a standard A4 sheet can hold about 1,800 legible, distinct characters. With simple arithmetic we can establish how much information, in digital terms, one standard typewritten sheet of alphabetic text and of the more informative hieroglyphic text will carry:

V = n * I = 1800 * 1 byte = 1800 bytes / 1024 = 1.76 kbytes, or 2.89 bytes / cm2 - alphabetic text

V = n * I = 1800 * 2 bytes = 3600 bytes / 1024 = 3.52 kbytes, or 5.77 bytes / cm2 - hieroglyphic text
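The same arithmetic as a sketch, assuming the text's 1800 characters per sheet and an A4 area of 29.7 x 21 cm (the function name is hypothetical):

```python
A4_AREA_CM2 = 29.7 * 21.0  # 623.7 cm2
CHARS_PER_SHEET = 1800     # legible characters per typewritten A4 sheet (from the text)


def sheet_text_capacity(bytes_per_char: int) -> tuple[float, float]:
    """Return (KB per sheet, bytes per cm2) for a typewritten A4 sheet."""
    total_bytes = CHARS_PER_SHEET * bytes_per_char  # V = n * I
    return total_bytes / 1024, total_bytes / A4_AREA_CM2


for label, size in [("alphabetic", 1), ("hieroglyphic", 2)]:
    kb, density = sheet_text_capacity(size)
    print(f"{label}: {kb:.2f} KB per sheet, {density:.2f} bytes/cm2")
```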

Evolutionary leap

The 19th century was a landmark for the methods of recording and storing analog data: it saw the emergence of revolutionary materials and methods of recording information that were to transform the world of IT. One of the main innovations was sound recording technology.

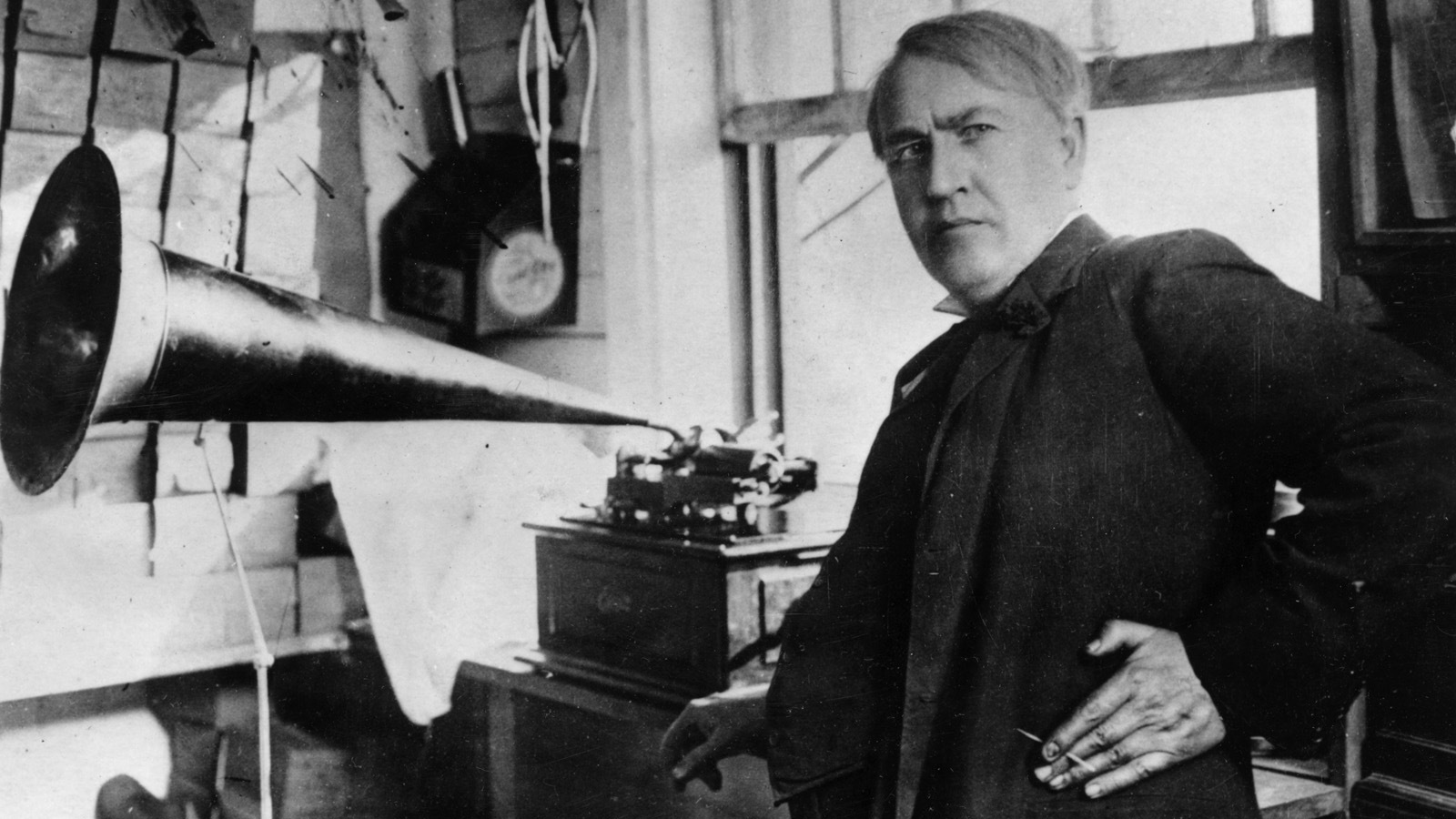

The invention of the phonograph by Thomas Alva Edison first gave rise to cylinders with grooves cut into them, and soon after to the first prototypes of gramophone records.

Responding to sound vibrations, the phonograph's cutter tirelessly made grooves in the surface, first of metal and later of polymer. Depending on the captured vibration, the cutter applied a winding groove of varying depth and width to the material, which in turn made it possible to record sound and to reproduce the once-engraved sound vibrations purely mechanically.

At the presentation of Edison's first phonograph at the Paris Academy of Sciences, there was an embarrassing incident: an elderly linguist, having barely heard human speech reproduced by a mechanical device, flew into a rage and rushed at the inventor with his fists, accusing him of fraud. According to this respected academician, metal could never repeat the melodies of the human voice, and Edison himself was an ordinary ventriloquist. But we all know that this is certainly not the case. Moreover, in the twentieth century people learned to store sound recordings in digital format, and now we will dive into some numbers that will make it quite clear how much information fits on a regular vinyl record (vinyl became the most typical, mass-market material for this technology).

As before with images, here we will build on human abilities to perceive information. It is widely known that the human ear can usually perceive sound vibrations from 20 to 20,000 Hz. On the basis of this constant, a sampling rate of 44,100 Hz was adopted for conversion to digital sound, since a correct conversion requires the sampling frequency to be at least twice the highest frequency of the original signal. Another important factor is the coding depth of each of the 44,100 samples taken per second. This parameter sets the number of bits per sample: the more bits used to encode the position of the recorded wave at each moment, the more accurately the digitized sound reproduces the original. The combination chosen for the most widespread format today, free of the distortion introduced by compression and used on audio CDs, is a 16-bit depth at 44.1 kHz. Although there are more "capacious" combinations of these parameters, up to 32 bit / 192 kHz, which may come closer to the actual sound quality of a gramophone recording, we will use 16 bit / 44.1 kHz in our calculations. It was this combination that, in the 1980s and 1990s, dealt a crushing blow to the analog sound recording industry, becoming in effect a full-fledged alternative to it.

And so, taking these parameters as the source sound parameters, we can calculate the digital equivalent of the amount of analog data that recording technology carries. The calculation is for a single (mono) channel; a stereo recording would need twice as much:

V = f * I = 44100 Hz * 16 bits = 705600 bit/s / 8 = 88200 bytes/s / 1024 = 86.13 kB/s
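To make the 16 bit / 44.1 kHz figure tangible, the sketch below generates one second of a single-channel test tone and measures its raw size with Python's standard `array` module. The 1 kHz tone is an arbitrary choice of ours, and the check `SAMPLE_RATE >= 2 * MAX_AUDIBLE_HZ` encodes the sampling-frequency rule described above:

```python
import math
from array import array

SAMPLE_RATE = 44_100  # samples per second
BIT_DEPTH = 16        # bits per sample
MAX_AUDIBLE_HZ = 20_000

# Sampling rule: the rate must be at least twice the highest frequency
assert SAMPLE_RATE >= 2 * MAX_AUDIBLE_HZ

# One second of a 1 kHz test tone, quantized to signed 16-bit integers (one channel)
amplitude = 2 ** (BIT_DEPTH - 1) - 1  # 32767, the largest 16-bit sample value
tone = array("h", (round(amplitude * math.sin(2 * math.pi * 1000 * n / SAMPLE_RATE))
                   for n in range(SAMPLE_RATE)))

bytes_per_second = len(tone.tobytes())  # 44,100 samples x 2 bytes
print(bytes_per_second, f"{bytes_per_second / 1024:.2f} KB/s")  # 88200 86.13 KB/s
```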

This calculation gives the amount of information needed to encode 1 second of sound recording. Since record sizes varied, as did the density of the grooves on their surface, the amount of information on particular records also differed significantly. The maximum time of high-quality recording on a vinyl record 30 cm in diameter was less than 30 minutes per side, which was at the limit of the material's capabilities; usually it did not exceed 20-22 minutes. It follows that the surface of a vinyl record could accommodate:

Vv = V * t = 86.13 kbytes / sec * 60 s * 30 = 155034 kbytes / 1024 = 151.40 MB

while in practice it held no more than:

Vvf = 86.13 kbytes / sec * 60 sec * 22 = 113691.6 kbytes / 1024 = 111.03 MB

The total area of such a record was:

S = π * r ^ 2 = 3.14 * 15 cm * 15 cm = 706.50 cm2

That is, about 160.92 kbytes of information per square centimeter of the record for a 22-minute side. Of course, this ratio will not scale linearly for other diameters, since here we take not the effective recording area but the entire medium.
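The vinyl figures follow from multiplying the per-second rate by playing time. In this sketch (names hypothetical) the disc area uses `math.pi` rather than 3.14, so the density for a 22-minute side comes out near 160.84 KB/cm2 rather than the hand-computed value; the 86.13 KB/s rate is the single-channel figure from the text:

```python
import math

KB_PER_SECOND = 86.13      # 16 bit / 44.1 kHz, single channel, as computed in the text
RECORD_DIAMETER_CM = 30.0


def side_capacity_mb(minutes: float) -> float:
    """Digital equivalent (MB) of `minutes` of sound on one side."""
    return KB_PER_SECOND * 60 * minutes / 1024


# Whole disc face, not just the band actually covered by the groove
area_cm2 = math.pi * (RECORD_DIAMETER_CM / 2) ** 2

for minutes in (30, 22):
    mb = side_capacity_mb(minutes)
    print(f"{minutes} min: {mb:.2f} MB, {mb * 1024 / area_cm2:.2f} KB/cm2")
```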

Magnetic tape

The last and perhaps the most efficient carrier of data recorded and read by analog methods was magnetic tape. Tape is in fact the only medium that has fairly successfully survived the analog era.

The technology of recording information by magnetization was patented at the end of the nineteenth century by the Danish physicist Valdemar Poulsen, but unfortunately it did not become widespread at the time. The technology was first used on an industrial scale only in 1935, by German engineers, who built the first tape recorder on its basis. Over 80 years of active use, magnetic tape has undergone significant changes. Different materials and different geometric parameters of the tape itself were used, but all these improvements rested on a single principle, the magnetic recording of vibrations developed by Poulsen in 1898.

One of the most widely used formats was a tape consisting of a flexible base coated with one of the metal oxides (iron, chromium, cobalt). The width of the tape used in household audio tape recorders was usually one inch (2.54 cm), its thickness started from 10 microns, and its length varied significantly from reel to reel, most often ranging from several hundred meters to a thousand. For example, a reel 30 cm in diameter could hold about 1000 m of tape.

Sound quality depended on many parameters of both the tape itself and the equipment reading it, but in general, with the right combination of these parameters, it was possible to make high-quality studio recordings on magnetic tape. Higher sound quality was achieved by using more tape per unit of sound time. Naturally, the more tape used to record each moment of sound, the wider the range of frequencies that could be transferred to the carrier. For studio, high-quality material, the recording speed was at least 38.1 cm/s. For everyday listening, a recording made at 19 cm/s was enough for sufficiently clean sound. As a result, up to 45 minutes of studio sound, or up to 90 minutes of content acceptable to most consumers, could fit on a 1000 m reel. For technical recordings or speeches, where the width of the frequency range during playback did not matter much, a tape speed of 1.19 cm/s made it possible to record as much as 24 hours on the same reel.
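Playing time is simply tape length divided by tape speed. This sketch (the helper `recording_minutes` is hypothetical) shows that the 45 minutes, 90 minutes and 24 hours quoted for a 1000 m reel are rounded-up figures:

```python
REEL_LENGTH_M = 1000


def recording_minutes(tape_length_m: float, speed_cm_per_s: float) -> float:
    """Playing time of a reel in minutes: tape length divided by tape speed."""
    return tape_length_m * 100 / speed_cm_per_s / 60


for label, speed in [("studio", 38.1), ("household", 19.0), ("speech", 1.19)]:
    minutes = recording_minutes(REEL_LENGTH_M, speed)
    print(f"{label} ({speed} cm/s): {minutes:.0f} min ({minutes / 60:.1f} h)")
```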

With a general idea of tape recording technology in the second half of the twentieth century, we can more or less correctly convert the capacity of reel media into the units of data volume we understand, as we already did for gramophone recording.

A square centimeter of such a medium will hold:

Vo = V / (S * n) = 86.13 kB / s / (2.54 cm * 1 cm * 19) = 1.78 kB / cm2

Total capacity of a reel with 1000 meters of tape:

Vh = V * t = 86.13 kB / s * 60 s * 90 = 465 102 kB / 1024 = 454.20 MB
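Both tape figures can be checked in a few lines. The sketch assumes, as the text does, one-inch tape, 19 cm/s and 90 minutes per 1000 m reel:

```python
KB_PER_SECOND = 86.13  # 16 bit / 44.1 kHz, single channel
TAPE_WIDTH_CM = 2.54   # one-inch tape, as assumed in the text
SPEED_CM_PER_S = 19.0  # household recording speed

# Each second of sound occupies width x speed square centimeters of tape
cm2_per_second = TAPE_WIDTH_CM * SPEED_CM_PER_S
density_kb_per_cm2 = KB_PER_SECOND / cm2_per_second

# A 1000 m reel at 19 cm/s plays for about 90 minutes
reel_mb = KB_PER_SECOND * 60 * 90 / 1024

print(f"{density_kb_per_cm2:.2f} KB/cm2, {reel_mb:.2f} MB per reel")
```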

Do not forget that the actual length of tape on a reel varied greatly: it depended primarily on the diameter of the reel and the thickness of the tape. Owing to their convenient dimensions, reels holding 500 to 750 meters of tape were widely used; for an ordinary music lover that was the equivalent of about an hour of sound, quite enough to hold an average music album.

The life of video cassettes, which used the same principle of recording an analog signal on magnetic tape, was rather short but no less bright. By the time this technology was in industrial use, recording density on tape had increased dramatically. 180 minutes of video, of very dubious quality by today's standards, fit on half-inch tape 259.4 meters long. The first video formats delivered a picture at 352x288 lines, and the best samples reached 352x576 lines. In terms of bitrate, the most advanced recording and playback methods made it possible to approach 3060 kbit/s, with the tape read at 2.339 cm/s. A standard three-hour cassette could hold about 1724.74 MB, which is generally not bad at all.

The digital age

The appearance and widespread adoption of numbers (binary coding) belongs entirely to the twentieth century. Although the philosophy of encoding with a binary 1/0, Yes/No code had hovered among mankind at different times and on different continents, sometimes taking the most astonishing forms, it finally materialized in 1937. Claude Shannon, a student at the Massachusetts Institute of Technology, building on the work of the great British mathematician George Boole, applied the principles of Boolean logic to electrical circuits, which was in fact the starting point for cybernetics in the form we know it today.
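Shannon's key observation can be illustrated with switches modelled as Boolean values: two switches in series behave like AND, two in parallel like OR. The half adder below is our own illustrative example of building binary arithmetic from such switch logic, not a circuit taken from Shannon's thesis:

```python
# Switches modelled as booleans: True = closed (current flows), False = open
def series(a: bool, b: bool) -> bool:
    """Two switches in series pass current only if both are closed: AND."""
    return a and b


def parallel(a: bool, b: bool) -> bool:
    """Two switches in parallel pass current if either is closed: OR."""
    return a or b


def half_adder(a: bool, b: bool) -> tuple[bool, bool]:
    """Add two one-bit numbers using only switch logic; returns (sum, carry)."""
    # XOR from series/parallel combinations plus negation (a normally-closed contact)
    total = parallel(series(a, not b), series(not a, b))
    carry = series(a, b)
    return total, carry


for a in (False, True):
    for b in (False, True):
        s, c = half_adder(a, b)
        print(int(a), "+", int(b), "=", f"{int(c)}{int(s)}")
```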

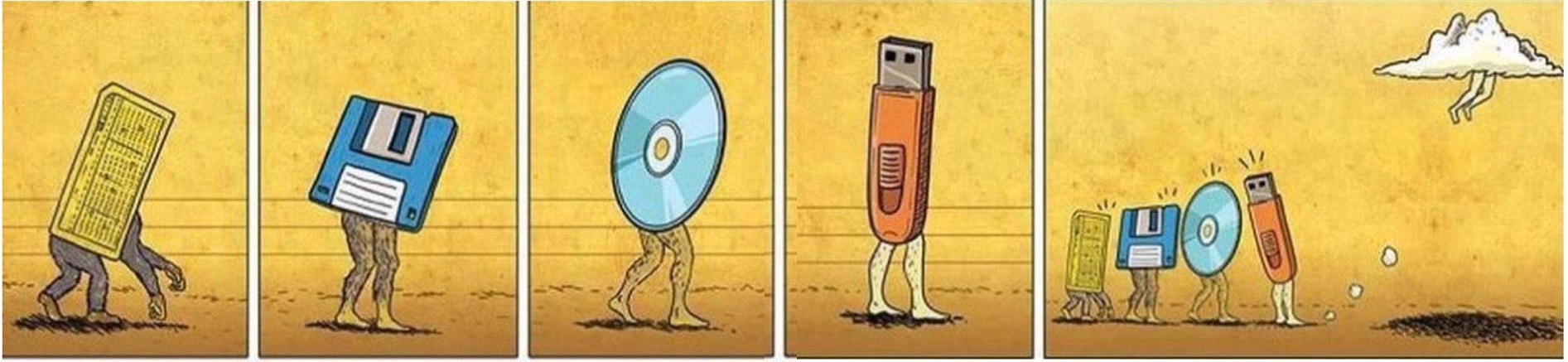

In less than a hundred years, both the hardware and software components of digital technology have undergone a great number of major changes. The same is true of storage media. Starting from the extremely inefficient paper media, we have arrived at the super-efficient solid-state storage. In general, the second half of the last century passed under the banner of experiments and the search for new forms of media, which can be succinctly described as a thorough mess of data formats.

Punched cards

Punched cards were perhaps the first step on the path of human-computer interaction. This form of communication lasted quite a long time; even now the medium can sometimes be found in particular research institutes scattered across the CIS.

One of the most common punched card formats was the IBM format, introduced back in 1928. This format became the basis for Soviet industry. According to GOST, such a punched card measured 18.74 x 8.25 cm. A punched card could hold no more than 80 bytes, only 0.52 bytes per 1 cm2. By this calculation, for example, 1 gigabyte of information would be equivalent to approximately 20.75 hectares of punched cards, and such a gigabyte would weigh a little less than 22 tons.
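The punched-card figures can be recomputed from the GOST dimensions and 80 bytes per card. This sketch assumes a binary gigabyte (1024^3 bytes) and 1 ha = 10^8 cm2; it does not attempt the weight estimate, which would need a per-card mass that the text does not give:

```python
CARD_W_CM, CARD_H_CM = 18.74, 8.25  # GOST card dimensions from the text
BYTES_PER_CARD = 80                 # 80 columns, one character each
GIGABYTE = 1024 ** 3                # binary gigabyte, as elsewhere in the article

card_area = CARD_W_CM * CARD_H_CM               # cm2 per card
density = BYTES_PER_CARD / card_area            # bytes per cm2
cards_per_gb = GIGABYTE / BYTES_PER_CARD
area_hectares = cards_per_gb * card_area / 1e8  # 1 ha = 10,000 m2 = 1e8 cm2

print(f"{density:.2f} B/cm2, {cards_per_gb:,.0f} cards, {area_hectares:.2f} ha")
```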

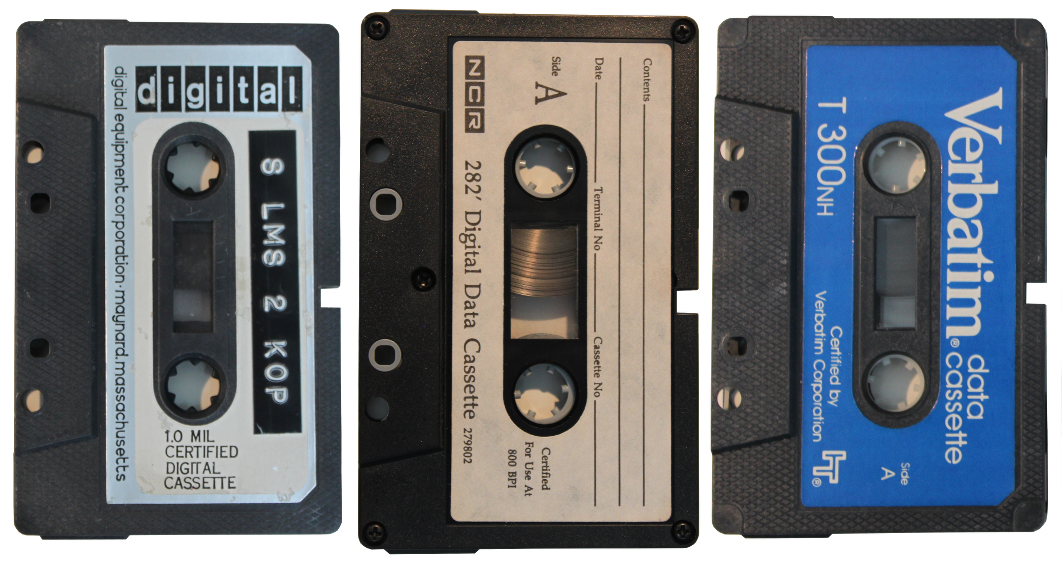

Magnetic tapes

In 1951, the first data carriers based on periodic magnetization of tape, designed specifically for recording "numbers", were released. This technology made it possible to store up to 50 characters per centimeter of half-inch metal tape. Later the technology was seriously improved, increasing the number of unit values per unit area many times over and reducing the cost of the carrier material itself.

At the moment, according to the latest statements by Sony Corporation, its nano-development makes it possible to record about 23 gigabits of information on 1 cm2. Such figures suggest that tape recording technology has not outlived itself and has quite bright prospects for further use.

Gramophone record

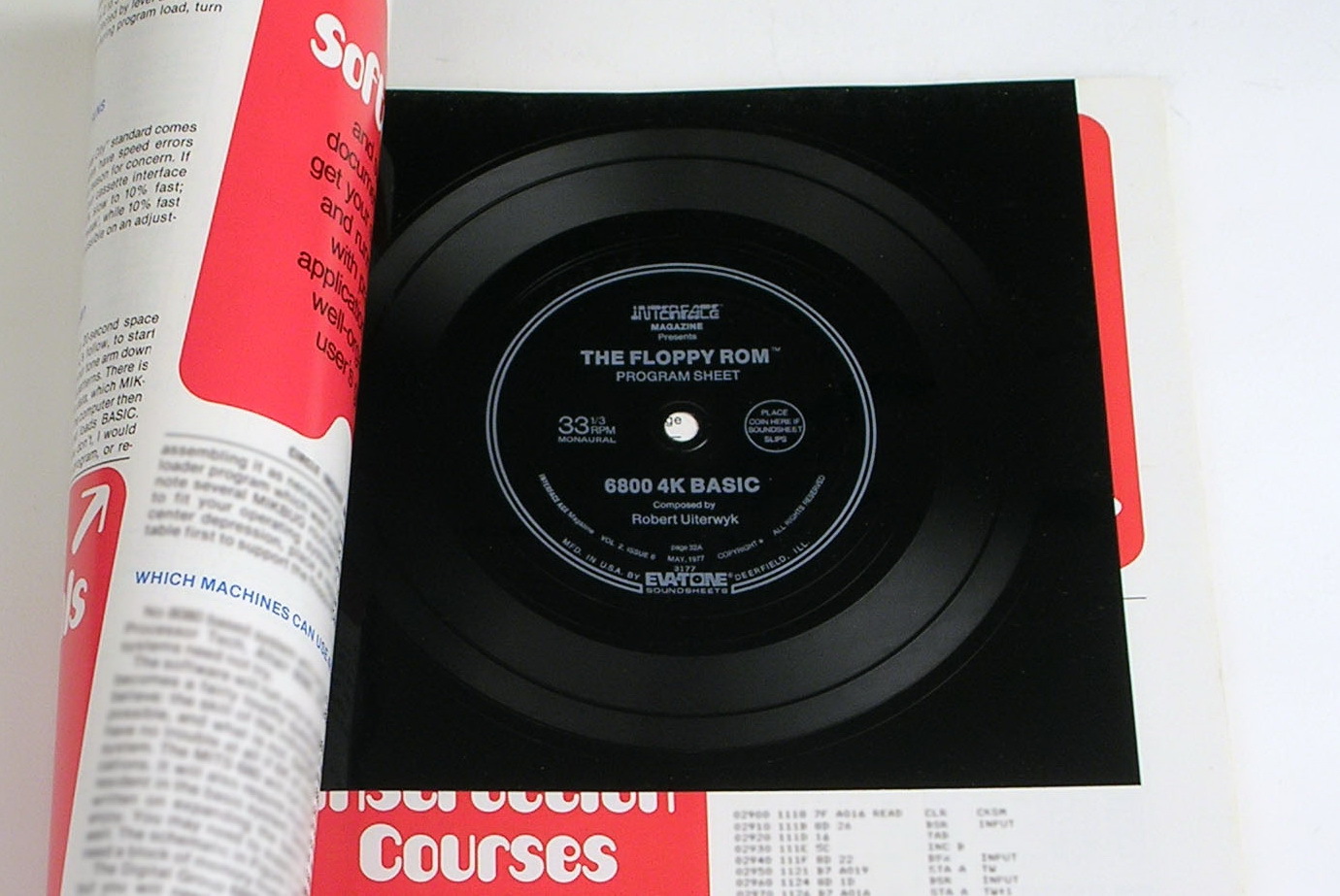

Probably the most astonishing method of storing digital data, but only at first glance. The idea of recording an existing program on a thin vinyl layer arose in 1976 at Processor Technology. The essence of the idea was to make the information carrier as cheap as possible. The company's employees took an audio tape with data recorded in the existing Kansas City Standard audio format and transferred it to vinyl. Besides reducing the cost of the medium, this solution made it possible to bind the engraved record into an ordinary magazine, which allowed programs to be distributed widely.
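For the curious, here is a sketch of how data is framed in the Kansas City Standard, using its commonly documented parameters: 300 bits per second, a 0 bit sent as four cycles of 1200 Hz, a 1 bit as eight cycles of 2400 Hz, and each byte wrapped in one start bit and two stop bits. It models only the bit stream and its duration, not audio synthesis, and the 4096-byte payload is an assumed stand-in for a 4K program:

```python
BAUD = 300                            # bits per second
TONES = {0: (1200, 4), 1: (2400, 8)}  # bit -> (frequency in Hz, number of cycles)


def frame_byte(value: int) -> list[int]:
    """One start bit (0), eight data bits least-significant first, two stop bits (1)."""
    data = [(value >> i) & 1 for i in range(8)]
    return [0] + data + [1, 1]


def bit_duration(bit: int) -> float:
    """Seconds taken by one bit: cycles / frequency (1/300 s for both tones)."""
    freq, cycles = TONES[bit]
    return cycles / freq


def encode_seconds(payload: bytes) -> float:
    """Playing time needed to carry `payload` in this format."""
    return sum(bit_duration(b) for byte in payload for b in frame_byte(byte))


print(frame_byte(0x41))                        # framing of the letter "A"
print(f"{encode_seconds(bytes(4096)):.1f} s")  # a 4 KB program
```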

In May 1977, magazine subscribers for the first time received a record with their issue; it held the 4K BASIC interpreter for the Motorola 6800 processor. The record played for 6 minutes.

For obvious reasons this technology did not catch on: officially, the last disc of this kind, the so-called Floppy-ROM, was released in September 1978; it was the fifth release.

Winchesters (hard drives)

The first hard drive was introduced by IBM in 1956: the IBM 350 came with one of the company's early production computers. The total weight of this "hard disk" was 971 kg, and in size it was comparable to a large cabinet. It housed 50 disks, each 61 cm in diameter. The total amount of information that could fit on this "hard drive" was a modest 3.5 megabytes.

The data recording technology itself was, so to speak, derived from gramophone recording and magnetic tape. The disks inside the case held a multitude of magnetic pulses, which were written to them and read back by the recorder's movable head. Like a gramophone tonearm, the head moved across the surface of each disk, at any moment gaining access to the required cell, which carried a magnetic vector of a certain direction.

At the moment, this technology is still alive and, moreover, actively developing. Less than a year ago, Western Digital released the world's first 10-terabyte hard drive. The case contained 7 platters, and instead of air it was filled with helium.

Optical discs

Optical discs owe their appearance to a partnership between two corporations, Sony and Philips. The optical disc was presented in 1982 as a convenient digital alternative to analog audio media. With a diameter of 12 cm, the first samples could hold up to 650 MB, which at a sound quality of 16 bit / 44.1 kHz amounted to 74 minutes of sound, and this value was not chosen at random. Beethoven's Ninth Symphony lasts exactly 74 minutes; it was dearly loved by one of the co-owners of Sony and one of the developers from Philips, and now it could fit completely on one disc.

The technology for writing and reading information is quite simple. Pits are burned into the mirror surface of the disc, and when the information is read optically they are unambiguously registered as 1/0.

Optical storage technology is still thriving in 2015. The technology we know as the Blu-ray disc with four-layer recording holds about 111.7 gigabytes of data at a not-too-high price, making it an ideal carrier for very "large" high-resolution films with deep color reproduction.

Solid State Drives, Flash Memory, SD Cards

All of this is the offspring of a single technology. The principle of recording information by storing an electric charge in an isolated region of a semiconductor structure was developed back in the 1950s, but for a long time it found no practical use in a full-fledged information carrier. The main reason was the large size of transistors, which even at the highest possible density could not yield a competitive product for the data storage market. The technology was remembered, and sporadic attempts to introduce it were made throughout the 1970s and 1980s.

So the finest hour for SSDs came in the late 1980s, when semiconductor elements began to reach acceptable sizes. In 1989, Japan's Toshiba presented a completely new type of memory, "Flash", from the English word "flash". The word itself aptly symbolized the main pros and cons of media based on this technology: previously unseen data access speed, a rather limited number of rewrite cycles, and, for some of these media, the need for an internal power supply.

To date, media manufacturers have achieved the highest memory density with the SDXC card standard. Measuring 24 x 32 x 2.1 mm, these cards can hold up to 2 TB of data.
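To put the media discussed so far side by side, this sketch collects the per-square-centimeter densities that follow from the article's own figures. The SDXC entry is an assumption of ours: it divides 2 TB (binary) by the 24 x 32 mm face of the card only, ignoring thickness:

```python
# Bytes per square centimeter, derived from figures given in this article
DENSITIES_B_PER_CM2 = {
    "punched card": 80 / (18.74 * 8.25),
    "typewritten A4, alphabetic": 1800 / (29.7 * 21.0),
    "magnetic tape (19 cm/s)": 86.13 * 1024 / (2.54 * 19.0),
    "vinyl record (22 min side)": 86.13 * 60 * 22 * 1024 / 706.5,
    # Assumption: a 2 TB SDXC card, counting only its 24 x 32 mm face
    "SDXC card": 2 * 1024 ** 4 / (2.4 * 3.2),
}

for medium, density in sorted(DENSITIES_B_PER_CM2.items(), key=lambda kv: kv[1]):
    print(f"{medium:28s} {density:>22,.2f} B/cm2")
```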

Cutting-edge scientific progress

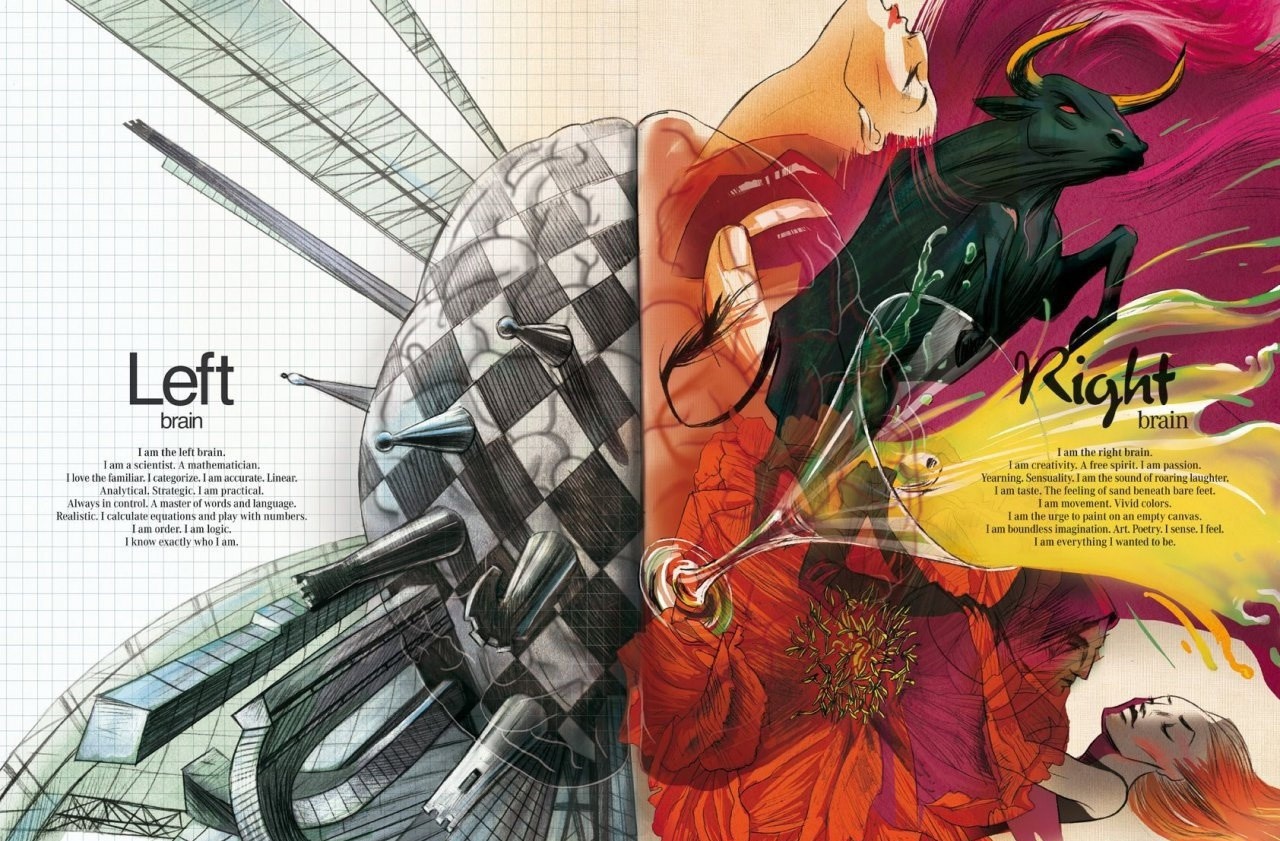

All the carriers we have dealt with so far belong to the world of inanimate nature, but let's not forget that the very first information store any of us ever dealt with is the human brain.

The principles by which the nervous system works are already understood today, at least in general terms. And however surprising it may sound, the physical principles of the brain are quite comparable to the principles on which modern computers are organized.

A neuron is the structural and functional unit of the nervous system, and neurons make up our brain. It is a microscopic cell with a very complex structure, which is effectively an analog of the familiar transistor. Neurons interact through signals carried by ions, which in turn generate electric charges, forming an unusual kind of circuit.

Even more interesting is how a nerve cell operates: like its silicon counterpart, it switches between two states. In microprocessors, a difference in voltage levels is conventionally read as 1 or 0; a nerve cell, in turn, maintains a potential difference that at any moment can take one of two polarities, "+" or "-". A significant difference between a neuron and a transistor lies in how fast the former can switch between the opposite values 1 and 0. Because of its structure, which we will not go into in detail here, a neuron is thousands of times slower than its silicon counterpart, which naturally limits its speed, that is, the number of requests it can process per unit of time.

But things are not so bleak for living organisms. Unlike a computer, where processes run sequentially, the billions of neurons in the brain solve tasks in parallel, which brings a number of advantages. Millions of these low-frequency processors quite successfully allow living beings, humans in particular, to interact with their environment.

Having studied the structure of the human brain, the scientific community came to a conclusion: the brain is in fact a single, integrated physical structure that already contains a computing processor, random-access memory, and long-term memory. Because of the neural structure of the brain itself, there are no clear physical boundaries between these "hardware" components, only blurred zones of specialization. This is confirmed by dozens of real-life cases in which, due to certain circumstances, people had part of their brain removed, up to half of its total volume. After such interventions, patients not only did not turn into a "vegetable" but in some cases, over time, recovered all their functions and lived happily to a very old age, serving as living proof of the flexibility and perfection of our brain.

Returning to the topic of the article, we arrive at an interesting conclusion: the structure of the human brain is in fact similar to the solid-state storage discussed just above. After such a comparison, keeping in mind all its simplifications, we may wonder how much data could be stored in such a memory. It may be surprising again, but we can get a quite definite answer; let's do the calculation.

Experiments carried out in 2009 by Suzana Herculano-Houzel, a neuroscientist at the Federal University of Rio de Janeiro in Brazil, found that the average human brain, weighing about one and a half kilograms, contains about 86 billion neurons; previously, scientists had believed the average figure to be 100 billion neurons. Based on these numbers, and equating each individual neuron to exactly one bit, we get:

$$V = \frac{86\,000\,000\,000\ \text{bits}}{1024^3} \approx 80.09\ \text{gigabits}, \qquad \frac{80.09\ \text{gigabits}}{8\ \text{bits per byte}} \approx 10.01\ \text{gigabytes}$$
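The same back-of-the-envelope estimate can be reproduced in a few lines of Python. This is a minimal sketch that keeps the article's own simplifying assumption of exactly one bit per neuron and uses binary prefixes (1 gigabit = 1024^3 bits); the function name `brain_capacity` is ours, and the second line simply reruns the arithmetic with the older 100-billion estimate for comparison.

```python
NEURONS_2009 = 86_000_000_000    # the 2009 estimate cited above
NEURONS_OLD = 100_000_000_000    # the earlier, commonly quoted estimate
BITS_PER_NEURON = 1              # the article's simplification: one neuron = one bit


def brain_capacity(neurons: int, bits_per_neuron: int = BITS_PER_NEURON) -> tuple[float, float]:
    """Return (gigabits, gigabytes) using binary prefixes (1024**3)."""
    bits = neurons * bits_per_neuron
    gigabits = bits / 1024**3
    gigabytes = gigabits / 8     # 8 bits per byte
    return gigabits, gigabytes


for label, n in (("2009 estimate", NEURONS_2009), ("earlier estimate", NEURONS_OLD)):
    gbit, gbyte = brain_capacity(n)
    print(f"{label}: {gbit:.2f} Gbit = {gbyte:.2f} GB")
```

With the 2009 figure this prints about 80.09 Gbit, or 10.01 GB; the older 100-billion estimate would give about 11.64 GB. Changing `bits_per_neuron` shows how strongly the result depends on the one-bit assumption.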

Is that a lot or a little, and how competitive could such a storage medium be? It is very hard to say so far. Every year the scientific community delights us with new progress in studying the nervous systems of living organisms. You can even find reports of information being artificially introduced into the memory of mammals. But on the whole, the secrets of how the brain thinks remain a mystery to us.

Conclusion

Although the article covers far from all types of storage media, of which there are many more, the most characteristic representatives have found a place in it. Summing up, we can clearly trace a pattern: the entire history of storage media is built on inheriting the stages that preceded the current moment. The progress of the past 25 years in storage media rests on experience gained over at least the last 100 to 150 years, while storage capacity over the past quarter century has grown exponentially, a case unique in the known history of humanity.

Despite the seemingly archaic nature of analog recording, right up to the end of the 20th century it remained a fully competitive way of working with information. An album of high-quality images could hold the digital equivalent of gigabytes of data, which until the early 1990s was simply physically impossible to fit on an equally compact medium, not to mention the lack of viable methods for working with such data arrays.

The first shoots of optical-disc recording and the rapid development of HDDs in the late 1980s destroyed the competitiveness of many analog recording formats within just one decade. Although the first music CDs did not differ qualitatively from the same vinyl records, offering 74 minutes of recording versus 50 to 60 minutes for a two-sided record, their density, versatility and the expected further development of the digital direction finally buried the analog format for mass use.

A new era of storage media, on whose threshold we now stand, may significantly change the world we will live in 10 to 20 years from now. Advanced research already gives us the opportunity to understand, at least superficially, how neural networks operate and to control certain processes in them. Although the potential for storing information in structures similar to the human brain is not that great, there are things that should not be forgotten. The workings of the nervous system itself remain rather mysterious because it has been studied so little. To a first approximation, it is evident that the principles by which it places and stores data follow a somewhat different law from the one that holds for analog and digital information processing. Just as in the transition from the analog stage of human development to the digital one, in the transition to the era of biomaterials the two previous stages will serve as the foundation, a kind of accelerator for the next leap. The need for intensified work in bioengineering was apparent earlier, but only now has the technological level of human civilization risen to the point where such work can really succeed. Whether this new stage in the development of information technology will swallow the previous one, as we have already had the honor of observing, or will run in parallel, it is too early to predict, but that it will radically change our lives is obvious.

Posted by: gordoncomanny.blogspot.com